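Recently I ran into a task at work that is closely tied to the TCP/IP protocols, so I need to go back and study TCP/IP properly. Only once I understand the principles can I deal with the problems that turn up later (as the saying goes, "plan before you act", rather than starting to code right away, only to find that the problem was never properly understood and the earlier coding was wasted effort...).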

Step.1 Decide on search keywords

tcp/ip protocol

tcp header struct

ip header struct

use python to capture pcap file

use python to capture http traffic

tcp packet reassemble

reassemble tcp packet

reassemble tcp segments

python http packet reassembly

http request/response parse

site:github.com parse http response

site:stackoverflow.com reassemble tcp segment

site:drops.wooyun.org python 网络

…

Step.2 Explanations of the protocols

- HTTP – Hypertext Transfer Protocol Overview

- Internet protocol suite – Wikipedia, the free encyclopedia

- The TCP/IP Guide – TCP/IP Protocols

- IP Packet Structure

- TCP Header Format

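- TCP/IP protocol header structs (a summary of excerpts from the web)

- Definitions of the IP header, TCP header, UDP header, and MAC frame header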

- Packet splitting and reassembly

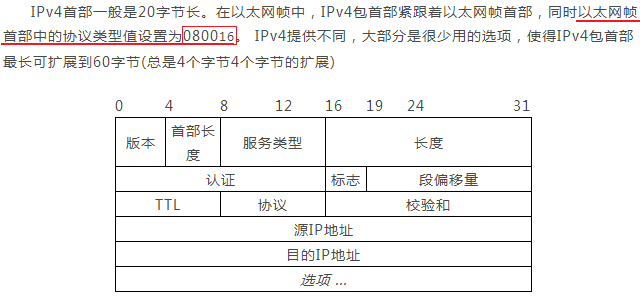

Header structure of an IP packet (for now this covers only the common, simpler IPv4; IPv6 is left out):

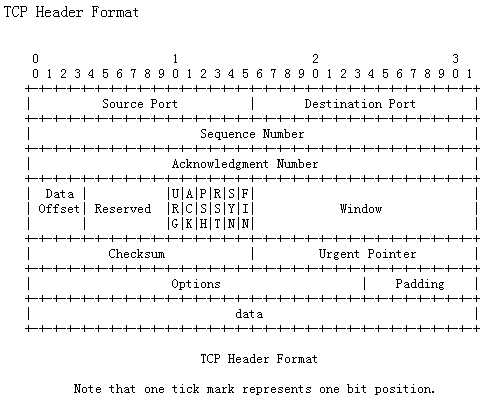
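To make the IPv4 header layout a little more concrete, here is a minimal sketch that decodes the fixed 20-byte part of a header with `struct` and checks its header checksum. The helper names `parse_ipv4_header` and `ipv4_checksum` are made up for this illustration, the sample bytes are a commonly quoted example header (UDP, 192.168.0.1 to 192.168.0.199), and IP options are ignored.

```python
import socket
import struct


def ipv4_checksum(header):
    """Internet checksum: one's-complement sum of the header's 16-bit words."""
    total = sum(struct.unpack("!%dH" % (len(header) // 2), header))
    while total >> 16:                        # fold carries back into 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def parse_ipv4_header(raw):
    """Decode the fixed 20-byte IPv4 header (hypothetical helper, options ignored)."""
    (ver_ihl, tos, total_len, ident, flags_frag,
     ttl, proto, checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", raw[:20])
    return {
        "version": ver_ihl >> 4,
        "header_len": (ver_ihl & 0x0F) * 4,   # IHL counts 32-bit words
        "total_len": total_len,
        "dont_fragment": bool(flags_frag & 0x4000),
        "more_fragments": bool(flags_frag & 0x2000),
        "fragment_offset": (flags_frag & 0x1FFF) * 8,
        "ttl": ttl,
        "protocol": proto,                    # 6 = TCP, 17 = UDP
        "checksum": checksum,
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
    }


# Sample header used only for illustration.
sample = bytes.fromhex("4500 0073 0000 4000 4011 b861 c0a8 0001 c0a8 00c7")
hdr = parse_ipv4_header(sample)
print(hdr)
# Summing a header whose checksum field is already correct yields zero.
print("checksum ok:", ipv4_checksum(sample) == 0)
```

The same format string `!BBHHHBBH4s4s` is what a raw-socket sniffer would apply to the first 20 bytes of each received packet.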

Header structure of a TCP segment:
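As a rough illustration of the fixed 20-byte part of the TCP header, the sketch below packs a hand-made header and decodes it again with `struct`, including the flag bits. The helper name `parse_tcp_header` and the sample values (a SYN from port 54321 to port 80) are assumptions made up for this example; TCP options after the first 20 bytes are not decoded.

```python
import struct

# Flag bits in the low byte of the 16-bit "data offset / reserved / flags" word.
TCP_FLAGS = (("FIN", 0x01), ("SYN", 0x02), ("RST", 0x04),
             ("PSH", 0x08), ("ACK", 0x10), ("URG", 0x20))


def parse_tcp_header(raw):
    """Decode the fixed 20-byte TCP header (hypothetical helper, options ignored)."""
    (sport, dport, seq, ack, off_flags,
     window, checksum, urgent) = struct.unpack("!HHLLHHHH", raw[:20])
    return {
        "sport": sport,
        "dport": dport,
        "seq": seq,
        "ack": ack,
        "header_len": (off_flags >> 12) * 4,  # data offset counts 32-bit words
        "flags": [name for name, bit in TCP_FLAGS if off_flags & bit],
        "window": window,
        "checksum": checksum,
        "urgent": urgent,
    }


# Hand-built SYN segment header: data offset 5 (20 bytes), only SYN set.
sample_tcp = struct.pack("!HHLLHHHH", 54321, 80, 1000, 0,
                         (5 << 12) | 0x02, 65535, 0, 0)
tcp_hdr = parse_tcp_header(sample_tcp)
print(tcp_hdr)
```

The payload of a real segment starts `header_len` bytes after the start of the TCP header, which is why the data offset has to be read before slicing out the data.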

Step.3 Simple network programming in Python

Here I mainly draw on examples found online:

- Google, AOL, Bing, and Baidu searches

- Questions on Stack Overflow

- Some code on GitHub

Code 1: capture packets with pcap and decode them with dpkt

    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-
    import socket

    import dpkt
    import pcap

    pc = pcap.pcap()     # open the default NIC; pass name='eth0' to pick one
    pc.setfilter('tcp')  # capture filter

    for ptime, pdata in pc:  # ptime: receive time, pdata: raw frame bytes
        eth = dpkt.ethernet.Ethernet(pdata)
        # Only handle IP over Ethernet (type 2048 == 0x0800) ...
        if eth.type != dpkt.ethernet.ETH_TYPE_IP:
            continue
        ip = eth.data
        # ... that carries TCP (protocol number 6)
        if ip.p != dpkt.ip.IP_PROTO_TCP:
            continue
        tcp = ip.data
        src_ip = socket.inet_ntoa(ip.src)
        src_port = tcp.sport
        dst_ip = socket.inet_ntoa(ip.dst)
        dst_port = tcp.dport
        if len(tcp.data) == 0:
            continue
        try:
            if tcp.dport == 80:
                http = dpkt.http.Request(tcp.data)
                print(src_ip, src_port, http.method, http.uri, len(http.body))
            if tcp.sport == 80:
                http_r = dpkt.http.Response(tcp.data)
                print(dst_ip, dst_port, http_r.status, len(http_r.body))
        except dpkt.dpkt.UnpackError as e:
            # A single segment is often not a complete HTTP message
            # (e.g. a later piece of a long response); report it and go on.
            print("Error", e)

Code 2: handle packets with a raw socket

    #!/usr/bin/env python3
    # coding=utf-8
    # Packet sniffer in Python for Linux
    # Sniffs only incoming TCP packets
    import socket
    import sys
    from struct import unpack

    # Create a raw socket that receives IPv4 packets carrying TCP (needs root)
    try:
        s = socket.socket(socket.AF_INET, socket.SOCK_RAW, socket.IPPROTO_TCP)
    except OSError as e:
        print('Socket could not be created. Error Code : %s Message %s'
              % (e.errno, e.strerror))
        sys.exit(1)

    while True:
        # recvfrom() returns (data, address); keep only the packet bytes
        packet = s.recvfrom(65535)[0]

        # The first 20 bytes are the fixed part of the IP header
        iph = unpack('!BBHHHBBH4s4s', packet[0:20])
        version_ihl = iph[0]
        version = version_ihl >> 4
        ihl = version_ihl & 0xF
        iph_length = ihl * 4  # IHL counts 32-bit words
        ttl = iph[5]
        protocol = iph[6]
        s_addr = socket.inet_ntoa(iph[8])
        d_addr = socket.inet_ntoa(iph[9])
        print('Version : %d IP Header Length : %d TTL : %d Protocol : %d '
              'Source Address : %s Destination Address : %s'
              % (version, ihl, ttl, protocol, s_addr, d_addr))

        # The TCP header starts right after the IP header (options included)
        tcph = unpack('!HHLLBBHHH', packet[iph_length:iph_length + 20])
        source_port = tcph[0]
        dest_port = tcph[1]
        sequence = tcph[2]
        acknowledgement = tcph[3]
        doff_reserved = tcph[4]
        tcph_length = doff_reserved >> 4  # data offset, in 32-bit words
        print('Source Port : %d Dest Port : %d Sequence Number : %d '
              'Acknowledgement : %d TCP header length : %d'
              % (source_port, dest_port, sequence, acknowledgement, tcph_length))

        # Everything after both headers is payload
        h_size = iph_length + tcph_length * 4
        data_size = len(packet) - h_size
        data = packet[h_size:]
        print(type(data), len(data), data)

Code 3: handle the response with http_parser

    #!/usr/bin/env python3
    import socket

    # Try to import the C parser, then fall back to the pure-Python parser.
    try:
        from http_parser.parser import HttpParser
    except ImportError:
        from http_parser.pyparser import HttpParser

    def main():
        p = HttpParser()
        s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        body = []
        try:
            s.connect(('ixyzero.com', 80))
            # Header lines end with CRLF; an empty line ends the request
            s.sendall(b"GET / HTTP/1.1\r\nHost: ixyzero.com\r\n\r\n")
            while True:
                data = s.recv(1024)
                if not data:
                    break
                recved = len(data)
                nparsed = p.execute(data, recved)
                assert nparsed == recved
                if p.is_headers_complete():
                    print(p.get_headers())
                if p.is_partial_body():
                    body.append(p.recv_body())
                if p.is_message_complete():
                    break
            print(b"".join(body))
        finally:
            s.close()

    if __name__ == "__main__":
        main()

Code 4: handle packets with Scapy

    #!/usr/bin/env python3
    '''
    http://www.secdev.org/projects/scapy/
    https://github.com/invernizzi/scapy-http
    '''
    from scapy.all import IP, TCP, sniff
    import scapy_http.http

    m_iface = "eth0"
    count = 0

    def pktTCP(pkt):
        global count
        count = count + 1
        # Test each layer separately: "A or B in pkt" would always be true
        is_request = scapy_http.http.HTTPRequest in pkt
        is_response = scapy_http.http.HTTPResponse in pkt
        if not (is_request or is_response):
            return
        src = pkt[IP].src
        srcport = pkt[TCP].sport
        dst = pkt[IP].dst
        dstport = pkt[TCP].dport
        test = pkt[TCP].payload
        if is_request:
            print("HTTP Request:")
            print(bytes(test))
            print("============================================================")
        if is_response:
            print("HTTP Response:")
            print(bytes(test))
            print("============================================================")

    sniff(filter="tcp and ( port 80 or port 8080 )", iface=m_iface, prn=pktTCP)

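The biggest problem with the code above is that none of it contains much logic for reassembling TCP segments: either the library it depends on takes care of that, or it is not done at all. For a requirement that needs the HTTP response body, none of the code above is sufficient. At the same time, implementing a stable TCP reassembly feature myself in a short time is clearly unrealistic, so at this point I need to look for a different solution.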
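To show what "reassembling TCP segments" means in the simplest case, here is a minimal sketch, not a robust implementation. It assumes the segments of one direction of one connection have already been collected as `(seq, payload)` pairs and that the sequence number of the first payload byte is known; the names `reassemble`, `parse_http_response`, and `_FakeSocket` are made up for this example. It ignores everything a real reassembler has to cope with (connection tracking, lost packets that never arrive, memory limits, and so on), and it parses the result with the standard library's `http.client`.

```python
from http.client import HTTPResponse
from io import BytesIO

SEQ_MOD = 2 ** 32  # TCP sequence numbers wrap around at 32 bits


def reassemble(segments, first_seq):
    """Rebuild one direction of a TCP stream from (seq, payload) pairs.

    Handles out-of-order, duplicated, and overlapping segments, and stops
    at the first gap. Returns the contiguous bytes starting at first_seq.
    """
    stream = bytearray()
    # Sort by offset relative to the first byte so wraparound is harmless.
    ordered = sorted(((seq - first_seq) % SEQ_MOD, data) for seq, data in segments)
    for offset, data in ordered:
        if offset > len(stream):       # a segment is missing: stop here
            break
        skip = len(stream) - offset    # bytes already seen (retransmission)
        if skip < len(data):
            stream += data[skip:]
    return bytes(stream)


class _FakeSocket:
    """Lets http.client parse bytes that did not come from a live socket."""

    def __init__(self, data):
        self._file = BytesIO(data)

    def makefile(self, *args, **kwargs):
        return self._file


def parse_http_response(raw):
    """Parse a complete raw HTTP response into (status, headers, body)."""
    resp = HTTPResponse(_FakeSocket(raw))
    resp.begin()
    return resp.status, dict(resp.getheaders()), resp.read()


# Made-up capture: out of order, one duplicate, one overlapping segment.
raw = b"HTTP/1.1 200 OK\r\nContent-Length: 11\r\n\r\nhello world"
segments = [(1016, raw[16:40]), (1000, raw[:16]),
            (1000, raw[:16]), (1030, raw[30:])]
stream = reassemble(segments, 1000)
status, headers, body = parse_http_response(stream)
print(status, headers, body)
```

The point of the sketch is only that the HTTP parser must be fed the ordered byte stream rather than individual segments, which is exactly the step the four code samples skip.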

Step.4 Related tools and libraries

- https://jon.oberheide.org/pynids/

- http://libnids.sourceforge.net/

- http://tcpreplay.synfin.net/

- http://justniffer.sourceforge.net/

- https://github.com/simsong/tcpflow

- https://github.com/jwiegley/scapy

- https://github.com/invernizzi/scapy-http

- https://github.com/jbittel/httpry

- https://github.com/benoitc/http-parser

- https://github.com/xiaxiaocao/pycapture

- http://code.google.com/p/pypcap/

- http://code.google.com/p/dpkt/

- sniffing network traffic in python

- tcpflow

- flowgrep

- ngrep

- tcpkill

- dsniff

- driftnet

- ……

Step.5 The road ahead

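The tool I have tentatively settled on is tcpflow. It meets part of the requirements; the finer-grained features I will have to add myself by modifying its source code. At least there is now a template and a target to work from, because starting from scratch would probably be more than I could handle!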
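As a small sketch of how tcpflow's output could be consumed from Python before touching its source: by default tcpflow writes each direction of a connection to a file named after the two endpoints, with zero-padded address octets and ports (for example after something like `tcpflow -r capture.pcap -o flows`). The function below assumes that default naming scheme as described in the tcpflow man page; the name `parse_flow_name` is made up, and names produced by custom filename templates or other extra suffixes are simply rejected.

```python
import re

# Default tcpflow flow file name: "AAA.BBB.CCC.DDD.PPPPP-AAA.BBB.CCC.DDD.PPPPP"
# (source address and port, a dash, then destination address and port).
_FLOW_NAME = re.compile(
    r"^(\d{3}(?:\.\d{3}){3})\.(\d{5})-(\d{3}(?:\.\d{3}){3})\.(\d{5})$"
)


def _unpad(ip):
    """Turn '093.184.216.034' into '93.184.216.34'."""
    return ".".join(str(int(octet)) for octet in ip.split("."))


def parse_flow_name(name):
    """Return (src_ip, src_port, dst_ip, dst_port), or None if name does not match."""
    m = _FLOW_NAME.match(name)
    if m is None:
        return None
    src, sport, dst, dport = m.groups()
    return _unpad(src), int(sport), _unpad(dst), int(dport)


flow = parse_flow_name("192.168.001.002.54321-093.184.216.034.00080")
print(flow)
```

With this, the files whose source port is 80 are the server-to-client byte streams, which is where the HTTP responses would be found.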

Appendix A. Reference links

Similar questions on Stack Overflow:

- http://stackoverflow.com/questions/15906308/how-to-sniff-http-packets-in-python

- http://stackoverflow.com/questions/5216332/how-to-reassemble-tcp-packets-in-python

- http://stackoverflow.com/questions/4481914/reassembling-tcp-segments

- http://stackoverflow.com/questions/13017797/how-to-add-http-headers-to-a-packet-sniffed-using-scapy

- http://stackoverflow.com/questions/16279661/scapy-fails-to-sniff-packets-when-using-multiple-threads

- http://stackoverflow.com/questions/7155050/capture-tcp-packets-with-python

- http://stackoverflow.com/questions/25606358/how-to-and-reassemble-a-segmented-http-packet

- http://stackoverflow.com/questions/4750793/python-scapy-or-the-like-how-can-i-create-an-http-get-request-at-the-packet-leve

- http://stackoverflow.com/questions/4948043/pcap-python-library

- http://stackoverflow.com/questions/17616773/how-to-dump-http-traffic

- http://stackoverflow.com/questions/2259458/how-to-reassemble-tcp-segment

- http://stackoverflow.com/questions/692880/tcp-how-are-the-seq-ack-numbers-generated

- http://stackoverflow.com/questions/600087/can-libpcap-reassemble-tcp-segments

- http://stackoverflow.com/questions/12836944/how-wireshark-marks-some-packets-as-tcp-segment-of-a-reassembled-pdu

- http://stackoverflow.com/questions/5705058/watching-http-in-wireshark-whats-the-relation-between-reassembled-tcp-vs-hyper

- http://stackoverflow.com/questions/2372365/is-there-a-way-to-save-a-reassembled-tcp-in-wireshark

- http://stackoverflow.com/questions/2650261/determining-http-packets

- http://stackoverflow.com/questions/9798120/how-to-reassemble-tcp-and-decode-http-info-in-c-code

- http://stackoverflow.com/questions/7411734/some-question-of-reassembling-tcp-stream

- http://stackoverflow.com/questions/2916612/reconstructing-data-from-pcap-sniff

- http://stackoverflow.com/questions/2346446/how-to-know-which-is-the-last-tcp-segment-received-by-the-server-when-data-is-tr

- http://stackoverflow.com/questions/756765/when-will-a-tcp-network-packet-be-fragmented-at-the-application-layer

- http://stackoverflow.com/questions/5658833/good-library-for-tcp-reassembly

- http://stackoverflow.com/questions/6151417/complete-reconstruction-of-tcp-session-html-pages-from-wireshark-pcaps-any-to

- http://stackoverflow.com/questions/8862196/network-sniffing-with-python

8 comments on "A review of the TCP/IP protocols"

File2pcap – a tool that generates a pcap traffic capture from any file you specify, with support for multiple protocols

http://blog.talosintelligence.com/2017/05/file2pcap.html

https://github.com/Cisco-Talos/file2pcap

Parsing HTTP/2 protocol packets with the Python dpkt library

https://gendignoux.com/blog/2017/05/30/dpkt-parsing-http2.html

A collection of tools for processing network packets and traffic

https://github.com/caesar0301/awesome-pcaptools

http://xiaming.me/awesome-pcaptools/

`

Linux commands

Traffic Capture

Traffic Analysis/Inspection

DNS Utilities

File Extraction

Related Projects

`

Building the TCP/IP protocols from scratch (this time called the PCT protocol)

https://jiajunhuang.com/articles/2017_08_12-tcp_ip.md.html

Building the TCP/IP protocols from scratch (part 2): connection setup, teardown, and congestion control

https://jiajunhuang.com/articles/2017_09_02-tcp_ip_part2.md.html

Networking knowledge software engineers need: from copper wire to HTTP (part 2): Ethernet and switches

https://lvwenhan.com/%E6%93%8D%E4%BD%9C%E7%B3%BB%E7%BB%9F/486.html

Networking knowledge software engineers need: from copper wire to HTTP (part 3): TCP/IP

https://lvwenhan.com/%E6%93%8D%E4%BD%9C%E7%B3%BB%E7%BB%9F/487.html

Ten must-know questions about TCP/IP

https://mp.weixin.qq.com/s/qn5fw8yHvjBou6Ps2Xo9Lw

`

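1. The TCP/IP model

2. The data link layer

3. The network layer

4. ping

5. traceroute

6. TCP/UDP

7. DNS

8. Establishing and terminating TCP connections

9. TCP flow control

10. TCP congestion control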

`

Have you fully mastered TCP/IP? Take a look at CloudFlare's interview questions

https://paper.tuisec.win/detail/94b92e3a9f12cd1

http://valerieaurora.org/tcpip.html

http://blog.jobbole.com/87398/

https://blog.cloudflare.com/cloudflare-interview-questions/

Do you really understand how the TCP three-way handshake works?

https://mp.weixin.qq.com/s/yH3PzGEFopbpA-jw4MythQ

http://veithen.github.io/2014/01/01/how-tcp-backlog-works-in-linux.html

http://www.cnblogs.com/zengkefu/p/5606696.html

http://www.cnxct.com/something-about-phpfpm-s-backlog/

http://jaseywang.me/2014/07/20/tcp-queue-%E7%9A%84%E4%B8%80%E4%BA%9B%E9%97%AE%E9%A2%98/

http://blog.chinaunix.net/uid-20662820-id-4154399.html